Taming Machine Learning with Software Update 20

Dear Backers,

This article explains the new interactive machine learning feature introduced in software update 20.

Nudging you when you are distracted or need a rest, and generating real-time biofeedback to help you reach peak mental performance, all depend on FOCI's ability to accurately distinguish the physiological characteristics of your breathing. Just as people can be tall, short, fat or thin, these characteristics vary from person to person. Even for the same person, they can differ greatly under different conditions.

FOCI therefore requires training, that is, machine learning, to recognize emotional states accurately. Training FOCI is simple: follow the guide on wearing FOCI correctly to get a good signal, and training will start automatically.

The Gap

Automatic machine learning is very sensitive to ‘false’ data. For example, if you wear FOCI to sleep, it learns your sleep physiology instead, which skews the machine learning. In the past, you had to reset the machine learning process to regain accuracy.
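To picture how this kind of skew can happen, here is a minimal sketch in Python. It is not FOCI's actual algorithm. It assumes, purely for illustration, that the learner keeps a running average of breathing rate; the function name `update_baseline`, the learning rate and the breathing rates are all made up.

```python
def update_baseline(baseline, sample, rate=0.1):
    """Move the baseline a fraction of the way toward each new sample
    (an exponential moving average; a hypothetical stand-in for training)."""
    return baseline + rate * (sample - baseline)


# Made-up breathing rates in breaths per minute, for illustration only.
awake = [15.0] * 50
asleep = [10.0] * 50

baseline = 15.0
for sample in awake:
    baseline = update_baseline(baseline, sample)
baseline_after_awake = baseline  # stays at the waking level

for sample in asleep:
    baseline = update_baseline(baseline, sample)
baseline_after_sleep = baseline  # has drifted close to the sleeping level

print(baseline_after_awake, baseline_after_sleep)
```

In this toy model, one night of sleep data is enough to pull the learned baseline away from the waking value, which is the kind of drift that previously called for a reset.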

In a nutshell

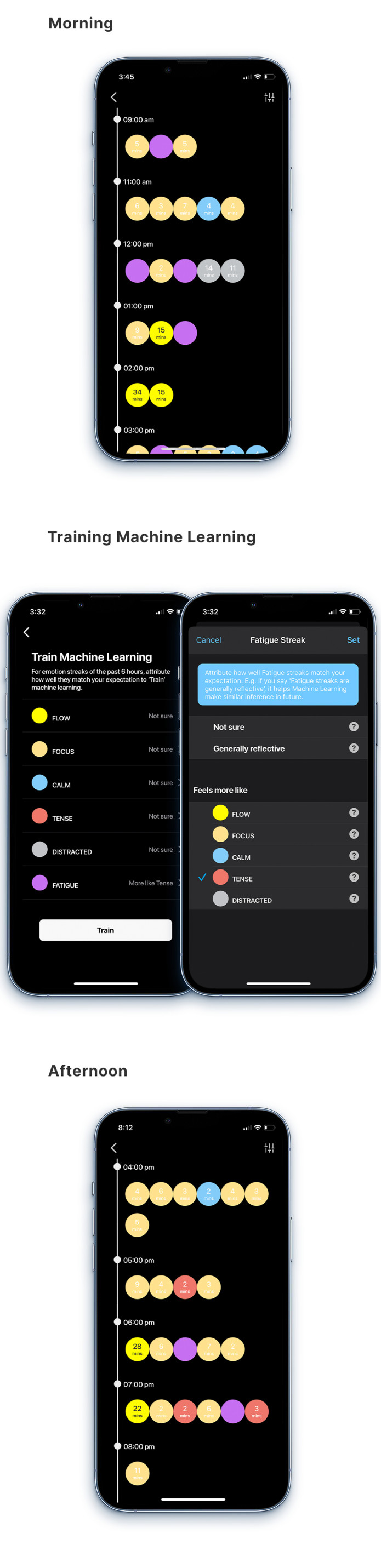

- Interactive machine learning allows you to calibrate the accuracy and sensitivity of emotional state detection.

- Your input can stabilize machine learning against data skewing.

Precaution

Our physiological states are not static: they shift in a cyclical manner and are affected by seasonal changes and health. Once you give your input, FOCI could become less sensitive to genuine physiological changes that it would otherwise take into account. So you may need to adjust the training once in a while.

Let’s get the show on the road

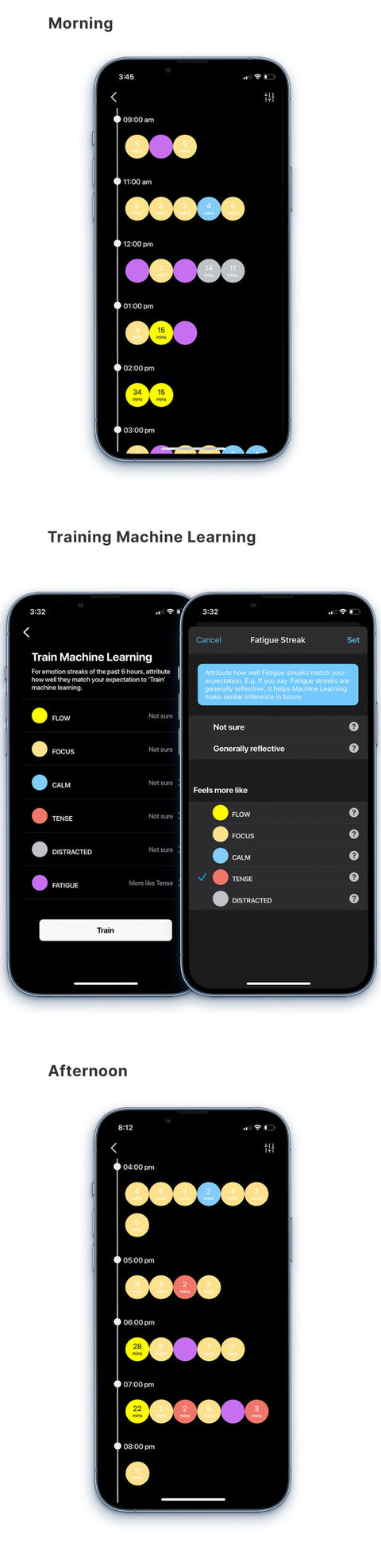

This is my little experiment: I feel like I’m more stressed than FOCI tells me, and I’m OK with type I errors (false positives). So I decided to tell FOCI that my distractions are more like fatigue. And voilà!
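The trade-off behind this choice can be sketched with a toy detector in Python. It is only an illustration, not how FOCI works internally: the scores, labels, thresholds and the `count_errors` helper are all hypothetical. Lowering the detection threshold makes the detector more sensitive, trading missed detections (false negatives) for false alarms (false positives).

```python
def count_errors(scores, labels, threshold):
    """Return (false_positives, false_negatives) for a simple threshold detector."""
    false_pos = sum(1 for s, y in zip(scores, labels) if s >= threshold and not y)
    false_neg = sum(1 for s, y in zip(scores, labels) if s < threshold and y)
    return false_pos, false_neg


# Hypothetical stress scores and whether the wearer was really stressed.
scores = [0.2, 0.4, 0.5, 0.6, 0.7, 0.9]
labels = [False, False, True, False, True, True]

strict = count_errors(scores, labels, threshold=0.65)     # misses one stressed moment
sensitive = count_errors(scores, labels, threshold=0.45)  # raises one false alarm

print(strict, sensitive)  # (0, 1) (1, 0)
```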

Best wishes,

Mick and the FOCI Team